06.1.Observe hidden layers

Code by karbon

This notebook is a modified version of 04.2.MNIST and CNN.ipynb that lets us observe the hidden layers.

1 | from __future__ import absolute_import, division, print_function, unicode_literals |

Import the MNIST dataset and preprocess it

1 | mnist = keras.datasets.mnist |

Build the model and train

This example is LeNet-5.

1 | model = keras.Sequential([ |

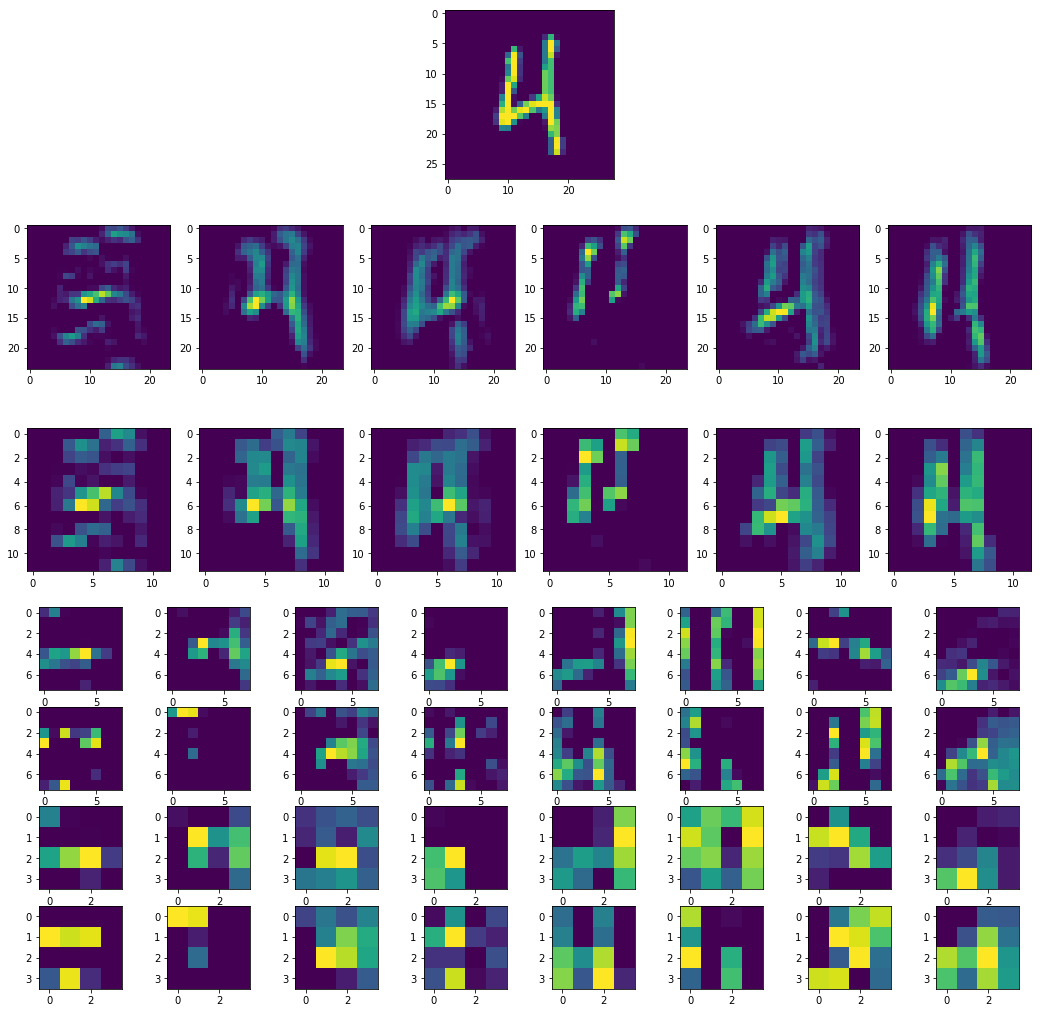

Make prediction and observe the hidden layers

1 | model.summary() |

1 | num = random.randint(0, len(test_images) - 1)  # randint includes both ends in the standard library |
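One common way to observe hidden layers in Keras is to build a second model that shares the trained weights but returns the output of every layer. The sketch below assumes a small LeNet-style stand-in (the layer names, sizes, and the random input image are hypothetical, not the notebook's actual model or data).

```python
import numpy as np
from tensorflow import keras

# Small LeNet-style stand-in; the notebook's real layer list is not reproduced here.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    keras.layers.Conv2D(6, kernel_size=5, padding="same",
                        activation="relu", name="conv1"),
    keras.layers.MaxPooling2D(pool_size=2, name="pool1"),
    keras.layers.Flatten(name="flatten"),
    keras.layers.Dense(10, activation="softmax", name="predictions"),
])

# A second model with the same inputs and weights that returns every layer's output.
activation_model = keras.Model(
    inputs=model.inputs,
    outputs=[layer.output for layer in model.layers],
)

# Hypothetical input: one random 28x28 grayscale image instead of a real test image.
image = np.random.rand(1, 28, 28, 1).astype("float32")
activations = activation_model.predict(image, verbose=0)

# Print the name and output shape of each layer for this single image.
for layer, activation in zip(model.layers, activations):
    print(layer.name, activation.shape)
```

Each entry of `activations` can then be plotted channel by channel, for example with `plt.imshow(activations[0][0, :, :, k])` for the k-th feature map of the first convolution.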

06.2.Better training methods

Attention! Before running this notebook, you need to run 05.1.ReadAnnotationsFromJSON.ipynb first.

1 | from __future__ import absolute_import, division, print_function, unicode_literals |

Import dataset and preprocessing

Because our dataset is fairly large, there may not be enough RAM to load all images at once. Instead, we can use flow_from_dataframe, which reads the images from disk batch by batch.

First, load the labels from our .csv file. We use pandas.read_csv, which loads a CSV file into a DataFrame.

1 | df = pd.read_csv("./dataset/resizedFullGarbageDataset/train.csv", encoding='gbk', dtype=str) |

Then, use keras.preprocessing.image.ImageDataGenerator to load the training set.

You can add data augmentation settings to ImageDataGenerator.

1 | batch_size = 32  # number of images loaded per batch |

1 | names = trainset.class_indices |

trainset[i] has two parts: trainset[i][0] is the i-th batch of images, and trainset[i][1] holds the one-hot encodings that represent their classes.
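To turn a batch of one-hot labels back into class names, we can invert the class_indices mapping and take the position of the largest value in each row. The sketch below uses small hypothetical stand-ins for `trainset.class_indices` and `trainset[i][1]`; the class names are made up for illustration.

```python
# Hypothetical stand-ins for trainset.class_indices and one batch of labels.
class_indices = {"glass": 0, "paper": 1, "plastic": 2}
label_batch = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0],
]

# Invert the mapping so that an index gives back its class name.
index_to_name = {index: name for name, index in class_indices.items()}


def decode_one_hot(row):
    """Return the class name for one one-hot encoded label row."""
    return index_to_name[row.index(max(row))]


decoded = [decode_one_hot(row) for row in label_batch]
print(decoded)
```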

1 | plt.figure(figsize=(16,8)) |

example:

Import a pre-trained model without prediction layers as feature extractor

1 | input_shape = (sizeX, sizeY, 3) |

Add prediction layers

1 | x = base_model.output |
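As a rough illustration of these two steps, the sketch below stacks a pooling layer and a softmax layer on top of a base network without its original classifier. Everything specific here is an assumption: MobileNetV2 is only a placeholder architecture, `weights=None` avoids downloading pre-trained weights (the notebook uses a pre-trained model), and the image size and class count are hypothetical.

```python
from tensorflow import keras

# Hypothetical values; the notebook defines its own sizeX, sizeY and classes.
size_x, size_y, num_classes = 96, 96, 4

# Base network without its prediction layers (include_top=False).
# weights=None keeps this sketch offline; a pre-trained run would pass "imagenet".
base_model = keras.applications.MobileNetV2(
    input_shape=(size_x, size_y, 3),
    include_top=False,
    weights=None,
)

# New prediction layers on top of the extracted feature maps.
x = base_model.output
x = keras.layers.GlobalAveragePooling2D()(x)
outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

model_finetune = keras.Model(inputs=base_model.input, outputs=outputs)
print(model_finetune.output_shape)
```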

Define optimizer and compile the model

1 | def schedule(epoch):  # adjust the learning rate (step length) according to the epoch |
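A schedule function of this kind maps the epoch number to a learning rate. The sketch below shows one possible choice, step decay, with hypothetical constants; it is not necessarily the schedule used in this notebook.

```python
# Hypothetical constants chosen only for illustration.
INITIAL_LR = 1e-3       # learning rate for the first epochs
DROP = 0.5              # multiply the rate by this factor at each drop
EPOCHS_PER_DROP = 10    # number of epochs between two drops


def schedule(epoch):
    """Return the learning rate for the given (0-based) epoch."""
    return INITIAL_LR * DROP ** (epoch // EPOCHS_PER_DROP)


# The rate stays constant within each block of 10 epochs, then halves.
for epoch in (0, 9, 10, 25):
    print(epoch, schedule(epoch))
```

A function with this signature can be passed to keras.callbacks.LearningRateScheduler, which calls it at the start of every epoch.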

Start learning

Because the dataset is large, training may take a very long time.

1 | STEP_SIZE_TRAIN=trainset.n//trainset.batch_size |
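The integer division above gives the number of full batches per epoch. The short sketch below, with a hypothetical sample count standing in for `trainset.n`, shows what this means when the dataset size is not a multiple of the batch size.

```python
import math

# Hypothetical stand-ins for trainset.n and trainset.batch_size.
n_samples = 1000
batch_size = 32

# Floor division counts only full batches.
steps_floor = n_samples // batch_size

# Images left over after the last full batch.
leftover = n_samples - steps_floor * batch_size

# Rounding up instead would add one smaller, final batch.
steps_ceil = math.ceil(n_samples / batch_size)

print(steps_floor, leftover, steps_ceil)
```

With floor division, the leftover images are simply not part of the counted steps in a given epoch; rounding up is an alternative if every image should be visited once per epoch.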

Evaluate the model

1 | model_finetune.evaluate_generator(testset,verbose=1) #add more layer |

Save the trained model

1 | modelname = 'finetuneExample0-9964' |